Handbook/Testing

Also see Tutorial Model-Based Testing.

State Space Coverage Analyses

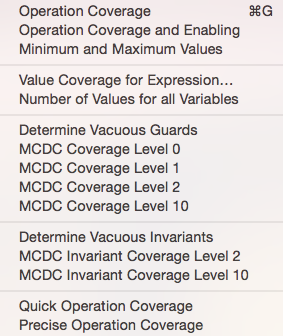

ProB provides various ways to analyse the coverage of the state space of a model. In ProB Tcl/Tk these features reside in the Coverage submenu of the Analyse menu:

These coverage commands all operate on the state space of the B model, i.e., those states that ProB has visited via animation or model checking from the initial states. Note: if you perform constraint-based checks, ProB may add additional states to this state space, which are not necessarily reachable from the initial states. Note: these commands do not drive the model checker and work on the currently explored state space; if the state space is incomplete the commands may obviously miss covered values in the unexplored part. Finally, the larger the state space, the more time these commands will take.

Here is a brief overview of the main commands:

- Operation Coverage: determine which operations (aka events for Event-B or actions for TLA) are covered in the state-space and compute how often each operation is enabled

- Minimum and Maximum Values: determine for each variable and constant a minumum and maximum value that is reached. This also detects whether just a single value was encountered. This command is particularly useful when model checking unexpectedly runs for very long or seems to run forever: here one can often spot individual variables which grow in an unbounded fashion.

- Value coverage for Expression: this allows the user to enter an expression, which is computed in every state of the state space; all values are then displayed in a table along with the possible values, how often these values have been encountered and a witness state id, giving you one possible witness state for this value.

- Number of covered values for variables: computes for each variable, how many different values it has taken on in the state space. Again, this is useful to diagnose state space explosion.

- Precise Operation Coverage: will check which operations have been covered in the current state space; for those operations that have not been covered, the constraint solver is called to determine whether the operation is actually feasible given the invariant (is there a state satisfying the invariant which enables the operation). If an operation is infeasible, it can never be reached (unless we reach a state violating the invariant).

The commands to determine vacuous guards and invariants rely on MC/DC coverage, which is detailed below.

MC/DC Coverage

MC/DC (Modified Condition/Decision Coverage) is a coverage criterion which is used in avionics. One core idea is ensure that for every condition in our code or model we generate at least one test where the condition independently plays an observable role in the outcome of a decision. In other words, if the condition were to be true rather than false (or vice versa) the decision would be changed. In still other words, we are trying to find an execution context where the result of the particular condition under test is crucial for the observable outcome.

In the context of B and Event-B, one question is what constitutes a condition and what constitutes a decision. We view a condition to be an atomic predicate (e.g., x>y) not involving logical connectives (&, or, not, =>, ⇔). There is, however, the possibility of treating a bigger predicate as atomic (e.g., an entire guard of an event). The reason for this is to provide better feedback to the user and to reduce the number of coverage criteria if desired. At the moment, we also consider a universally or existentially quantified formula to be a condition. A decision constitutes the fact whether an event is enabled or not. However, we also allow MC/DC to be applied in other contexts, e.g., to analyse invariants; in that setting a decision is whether an invariant is true or false.

We have implemented a MC/DC coverage analysis which examines all visited states and computes the MC/DC coverage for a series of predicates under consideration. Currently MC/DC coverage is used in two ways:

- for the coverage for the invariants, where the invariant is expected to be true (i.e., the analysis does not expect to find states where the invariant is false)

- for the coverage for the event guards. Here it is expected that every event can be enabled or disabled.

The algorithm is parametrised by an optional depth bound (called level in the coverage menu of ProB Tcl/Tk): if the depth bound is reached, then all sub-predicates are considered to be atomic decisions. The analysis can be used to measure the coverage of tests, but can also be used after an exhaustive model check to detect vacuous guards and vacuous invariants.

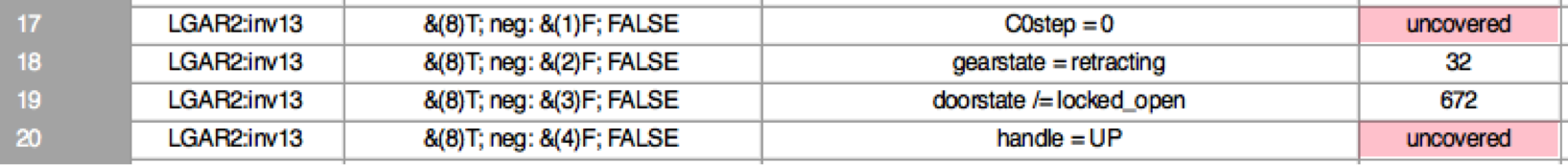

The MC/DC analysis has been integrated into ProB and has been successfully applied to various case studies. Below is a screenshot of part of the output for an early version of the ABZ landing gear case study. It shows that parts of the invariant inv13 not(C0step = 0 & (gearstate = retracting & doorstate /= locked_open & handle = UP)) cannot be set independently to false. This highlights a redundancy in the invariant; indeed the simpler to understand (but stronger) invariant not(gearstate = retracting & doorstate /= locked_open) holds.

Test Case Generation

Introduction

Testing aims at finding errors in a system or program. A set of tests is also called a test suite. Test case generation is the process of generating test suites for a particular system. Model-based Testing (MBT) is a technique to generate test suites for a system from a model describing the system. One usually tries to generate test suite which satisfies a given coverage criterion, e.g., ensuring that parts of the model are exhaustively exercised. Indeed, exhaustive testing of the implemented system is usually infeasible (because it would require infinitely many or way too many test cases), but one would like to increase the likelihood that the selected test suite uncovers errors in the implemented system.

In our case we have that

- a model is a formal model written in B, Event-B or even TLA+ and Z

- a test-case is a sequence of operations (or events) along with parameter values for the operations and concrete values for the constants and initial values of the formal model. One can also specify that a valid test case must end in a given class of states (specified by a predicate on the formal model's state).

- the coverage criterion is currently restricted to operation/event coverage, i.e., trying to ensure that every operation/event of the model is exercised by at least one test case.

ProB has two main algorithms to find suites of test cases:

- model-checking based (invoked, e.g., using the mcm_tests command of probcli) and

- constraint-based (invoked, e.g., using the cbc_tests command of probcli; more details can be found below)

The former will run the model checker to generate the state space of the formal model, and will stop when the coverage criterion has been satisfied. The full state space of the model contains all possible initialisations of the machine, along with all possible values of the constants. The latter will use ProB's constraint solver to generate feasible sequences of events in a breadth-first fashion and will also stop when the coverage criterion has been satisfied. (You may want to examine the Tutorial_Model_Checking,_Proof_and_CBC in this context.) So what are the differences:

- the constraint-based approach will instantiate the constants and parameters of the operations as required by the target sequence. It will not examine every possible valuation for the constants and initial values of the machine, nor every possible value for the parameters of the operation.

- the constraint-based approach hence does not construct the full-state space (aka a graph), but rather constructs a tree of feasible execution paths. The constraint-based approach cannot detect cycles; even if the state space is finite it may run forever in case an operations cannot be covered (because it is infeasible).

Model-Checking-Based Test Case Generation

Model-checking-based test case generation will build the model's state space, starting from the initial states, until the coverage criterion is satisfied. ProB uses a "breadth-first" approach to search for the test cases.

How to run

We will use the following B Machine as an example, which is stored in a file called simplecounter.mch.

MACHINE SimpleCounter

DEFINITIONS MAX==4

VARIABLES count

INVARIANT

count: 1..MAX

INITIALISATION count := 1

OPERATIONS

Increase(y) = PRE y: 1..(MAX-count) THEN

count := count+y

END;

Reset = BEGIN count := 1 END

END

To start the test case generation there are two alternatives: Using the tcl/tk-application or the command line.

From the command line

From the command line there are two commands to configure the test case generation:

-mcm_tests Depth MaxStates EndPredicate File

generate test cases with maximum length Depth, exploring MaxStates

states; the last state satisfies EndPredicate and the

test cases are written to File

-mcm_cover Operation(s)

Specify an operation or event that should be covered when generating

test cases. Multiple operations/events can be specified by seperating

them by comma or by using -mcm_cover several times.

When all requested operations are covered by test cases within maximum length Depth, the algorithm will still explore the complete state space up to that maximum distance Depth from the initialisation. It outputs all found traces that satisfy the given requirements. The algorithm stops when it either

- has covered all required operations/events with(/and?) the current search depth

- or has reached the maximum search depth or maximum number of states to explore.

Example: To generate test cases that cover the event Increase in the machine above, leading to a state where count = MAX-1 is true, the explored paths do not have a depth of more than 5 operations and not more than 2000 states are explored, we would specify this as follows, where we have a timeout of 2000 ms for each explored trace:

./probcli.sh simplecounter.mch -mcm_tests 5 2000 count=MAX-1 test_results.xml -mcm_cover Increase

The generated test cases are stored in a file called test_results.xml. You can provide the empty filename "" in which case no file is generated. Providing the same as `EndPredicate` will only lead to test cases of length 1.

As of version 1.6.0, the operation arguments are also written to the XML file. The preference INTERNAL_ARGUMENT_PREFIX can be used to provide a prefix for internal operation arguments; any argument/parameter whose name starts with that prefix is considered an internal parameter and not shown in the trace file. Also, as of version 1.6.0, the non-deterministic initialisations are shown in the XML trace file: all variables and constants where more than one possible initialisation exists are written into the trace file, as argument of an INITIALISATION event.

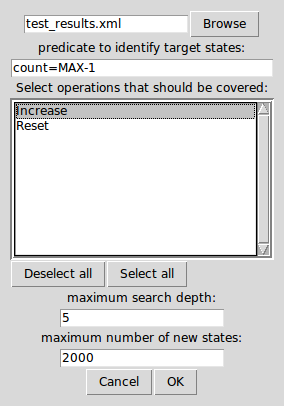

In the tcl/tk-application

In the tcl/tk-application the test case generation can be started by choosing Analyse > "Testing" > "model-checking-based test case generation...". In the appearing window one can set the same parameters as described for the command line.

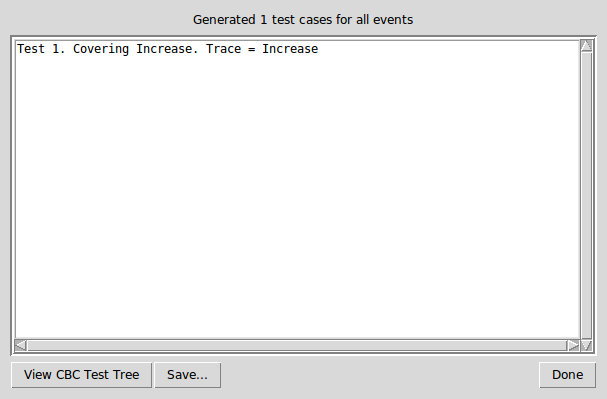

After a short while you will see the following window:

You cannot look at the test cases in ProB. Instead you can open the file you just saved the results to, which looks like this:

<extended_test_suite>

<test_case id="1" targets="[Increase]">

<global>

<step id="1" name="Increase">

<value name="y">4</value>

</step>

</global>

</test_case>

</extended_test_suite>

Constraint-based Test Case Generation

Constraint based test case generation allows to explore the state space for a machine and generate traces of operations that cover certain user defined operations and meet a given condition to end the search. (See also the bounded model checker of ProB which uses this technique to find invariant violations.)

When should one use the constraint-based test case generator:

- when one has a large number of possible values for the constants

- when one has a large number of possible values for the initial values

- when one has a large number of possible values for the parameters of the operations

- when the lengths of the individual test-cases remains relatively low; indeed, the complexity of the constraint solving increases with the length of the test-case and the number of candidate test cases also typically grow exponentially with the depth of the feasible execution paths.

Example: When Constraint-based Test Case Generation is better

Here is an example which illustrates when constraint-based test case generation is better.

MACHINE Wiki_Example1 CONSTANTS n PROPERTIES n:NATURAL1 VARIABLES x, y INVARIANT x: 0..n & y:0..n INITIALISATION x :: 1..n || y := 0 OPERATIONS Sety(yy) = PRE yy:1..n THEN y:=yy END; BothOverflow = SELECT x=y & y> 255 THEN x,y := 0,0 END END

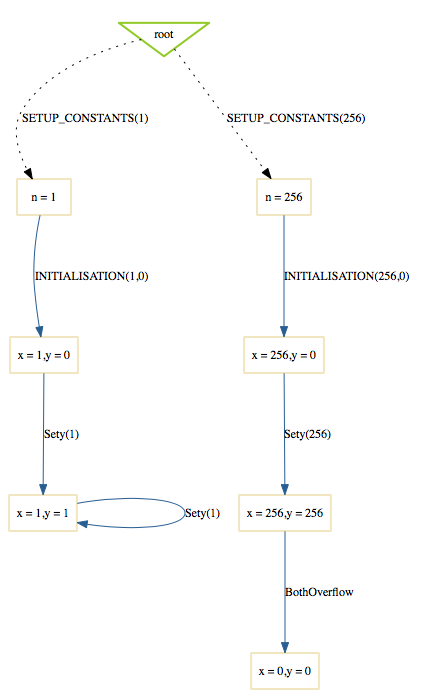

The state space of this machine is infinite, as we have infinitely many possible values for n. For large values of n, we also have many possible initialisations for x and may possible parameter values for the Sety operation. This gives us an indication that the constraint-based test-case generation algorithm is better suited. Indeed, it will very quickly generate two test cases:

- SETUP_CONSTANTS(1) ; INITIALISATION(1,0) ; Sety(1)

- SETUP_CONSTANTS(256) ; INITIALISATION(256,0) ; Sety(256) ; BothOverflow

For the second test, the constraint solver was asked to find values for n, x, y, and the parameter yy so that the following sequence is feasible:

- SETUP_CONSTANTS(n) ; INITIALISATION(x,y) ; Sety(yy) ; BothOverflow

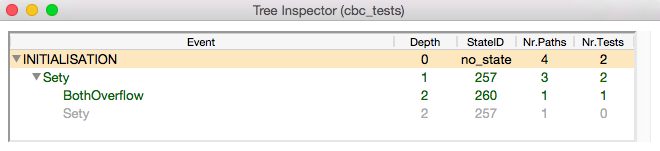

The first solution it found was n=256,x=256,y=0,yy=256. The whole test-case generation process takes less than a second. The generated tree can be visualised by ProB:

One can see that the only path of length 1 (not counting the INITIALISATION step) consists of the operation Set. The possible paths of length 2 are Set;BothOverflow and Set;Set. (The latter is grayed out as it does not provide a new test case.) Within ProB's state space the following states are generated by the test case generator. As one can see only the values n=1 and n=256 were generated, as driven by ProB's constraint solver:

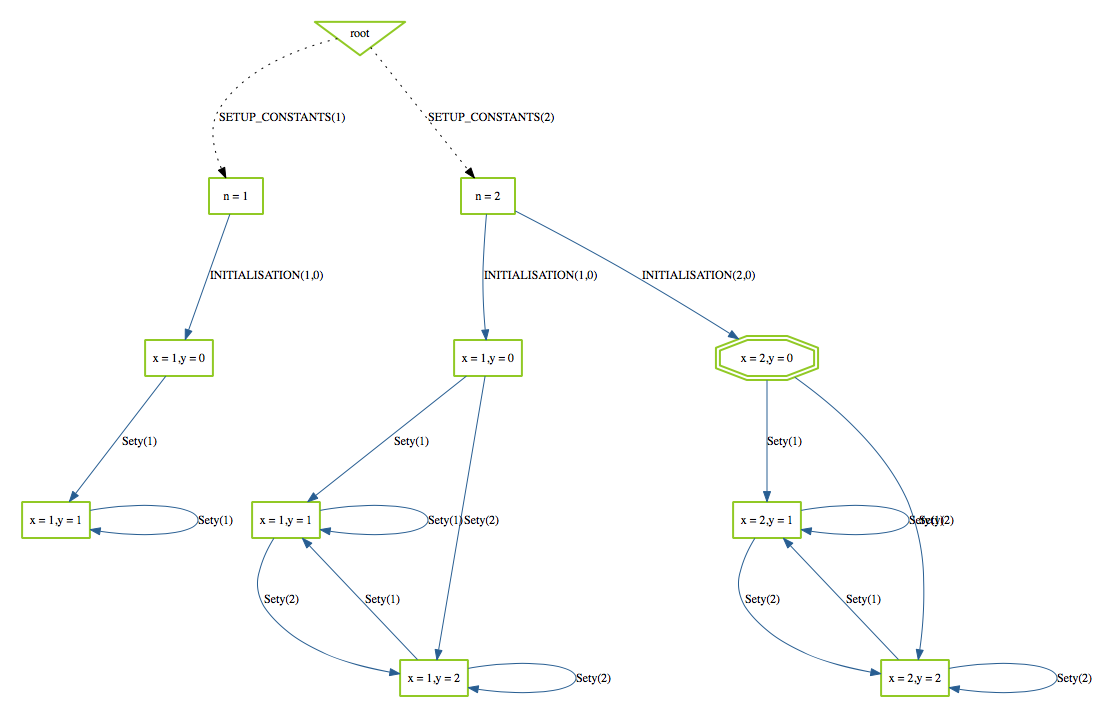

Finding a trace such that BothOverflow is enabled using the model checker will take much more time. Indeed, first one has to set MAXINT to at least 256 so that the value n=256 will eventually be generated. Then one has to set MAX_INITIALISATIONS also to at least 256 so that this value will actually be inspected by the model checker. Finally one has to set MAX_OPERATIONS also to at least 256 to generate yy=256; leading to a (truncated) state space of at least 16,777,216 states. Below is the state space just for the values n=1 and n=2 (which contains no state where BothOverflow is enabled):

How to run

We will again use the machine simplecounter.mch. To start the test case generation there are three alternatives: Using the tcl/tk-application or using the command line by either providing all settings as command line arguments or in a test description file.

From the command line

From the command line there are six relevant settings to configure the test case generation:

-cbc_tests Depth EndPredicate File

generate test cases by constraint solving with maximum

length Depth, the last state satisfies EndPredicate

and the test cases are written to File

-cbc_cover Operation

when generating CBC test cases, Operation should be covered. Each

operation to be covered needs to be specified separately.

-cbc_cover_match PartialOpName

just like -cbc_cover but for all operations whose name contains "PartialOpName"

-cbc_cover_final

specifies that the events specified above should only be used as final events in test-cases.

This option can lead to a considerable reduction in running time of the algorithm.

-p CLPFD TRUE

flag to enable the CLPFD constraint solver to search the state space, which is highly recommended.

-p TIME_OUT x

time out in milliseconds to abort the exploration of each possible trace

Example: To generate test cases that cover the event Increase in the machine above, leading to a state where count = MAX-1 is true and the explored paths do not have a depth of more than 5 operations we would specify this as follows, where we use CLPFD and have a timeout of 2000 ms. for each explored trace:

./probcli.sh simplecounter.mch -cbc_tests 5 count=MAX-1 test_results.xml -cbc_cover Increase -p CLPFD true -p TIME_OUT 2000

The generated test cases are stored in a file called test_results.xml. Just as with model-checking-based test case generation you can provide the empty filename

''

, in which case no file is generated, and an empty EndPredicate that will only lead to test cases of length 1.

With a test description file

The configuration for the test case generation can also be provided as an XML file. The format is shown below:

<test-generation-description>

<output-file>OUTPUT FILE NAME</output-file>

<event-coverage>

<event>EVENT 1</event>

<event>EVENT 2</event>

</event-coverage>

<target>TARGET PREDICATE</target>

<!-- the parameters section contains parameters that are very ProB-specific -->

<parameters>

<!-- the maximum depth is the maximum length of a trace of operations/event,

the algorithm might stop earlier when all events are covered -->

<maximum-depth>N</maximum-depth>

<!-- any ProB preference can be set that is listed when calling "probcli -help -v" -->

<!-- other probably interesting preferences are MININT, MAXINT and TIME_OUT -->

</parameters>

</test-generation-description>

Example: For our example the description file would look as follows.

<test-generation-description>

<output-file>test_results.xml</output-file>

<event-coverage>

<event>Increase</event>

</event-coverage>

<target>count = MAX - 1</target>

<parameters>

<maximum-depth>5</maximum-depth>

<!-- Please note: TIME_OUT (in milliseconds) is not a global time out, it is per trace -->

<preference name="CLPFD" value="true"/>

<!-- Please note: TIME_OUT (in milliseconds) is not a global time out, it is per trace -->

<preference name="TIME_OUT" value="2000"/>

</parameters>

</test-generation-description>

Assuming the test description above is stored in file named simple_counter_test_description.xml, we start the test case generation with the following call.

./probcli.sh simplecounter.mch -test_description simple_counter_test_description.xml

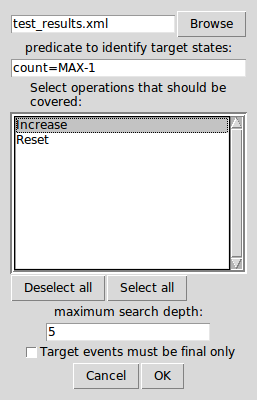

In the tcl/tk-application

In the tcl/tk-application the test case generation can be started by choosing Analyse > Testing > constraint-based test case generation.... In the appearing window one can set the same parameters as described for the command line.

After a short while you will see the following window:

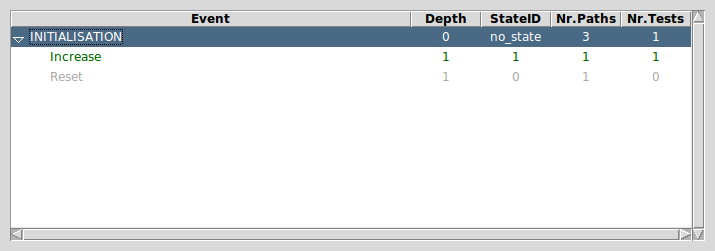

Clicking on View CBC Test Tree will open a window showing the test cases. In this case there is only one test case generated. After just one execution of Increase the EndPredicate count=MAX-1 is satisfied and all operations that we specified are covered, hence the test case's depth is 1.

All three execution variants lead to the same output in the file test_results.xml:

<extended_test_suite>

<test_case>

<initialisation>

<value type="variable" name="count">1</value>

</initialisation>

<step name="Increase">

<value name="y">4</value>

<modified name="count">5</modified>

</step>

</test_case>

</extended_test_suite>

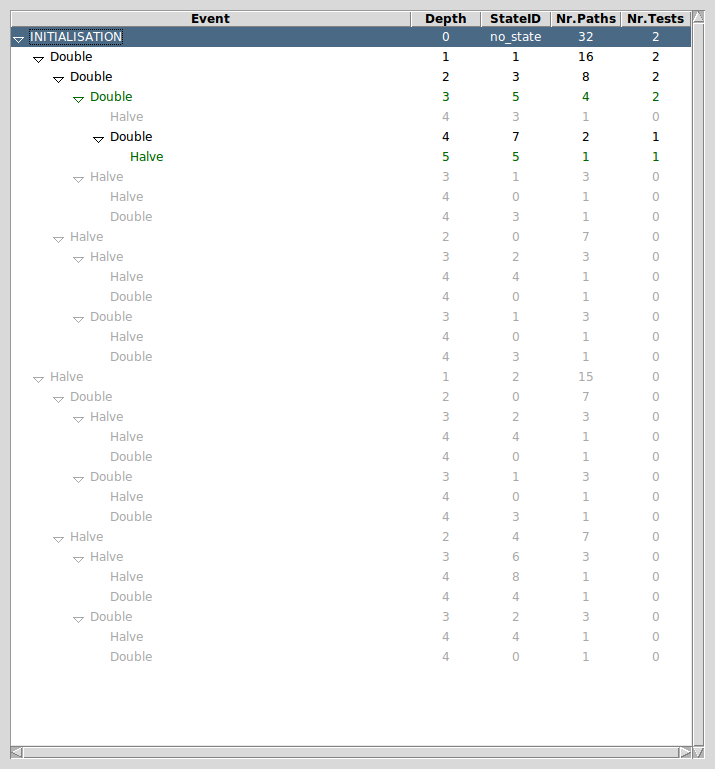

Another model, for which the given EndPredicate cannot be satisfied after one step, leads to the following test cases and tree structure of possible traces:

<extended_test_suite>

<test_case>

<initialisation>

<value type="variable" name="counter">8</value>

</initialisation>

<step name="Double" />

<step name="Double" />

<step name="Double" />

</test_case>

<test_case>

<initialisation>

<value type="variable" name="counter">8</value>

</initialisation>

<step name="Double" />

<step name="Double" />

<step name="Double" />

<step name="Double" />

<step name="Halve" />

</test_case>

</extended_test_suite>

References

Constraint-based test-case generation using enabling analysis:

- Aymerick Savary, Marc Frappier, Michael Leuschel. Model-Based Robustness Testing in Event-B Using Mutation. Proceedings SEFM'2015.

Enabling Analysis:

- Ivaylo Dobrikov, Michael Leuschel. Enabling Analysis for Event-B. Sci. Comput. Program. 158: 81-99. 2018.

Explicit test-case generation:

- Sebastian Wieczorek, Vitaly Kozyura, Andreas Roth, Michael Leuschel, Jens Bendisposto, Daniel Plagge, Ina Schieferdecker. Applying Model Checking to Generate Model-Based Integration Tests from Choreography Models. Proceedings FATES 2009, TestCom 2009.