Test Case Generation: Difference between revisions

| Line 179: | Line 179: | ||

Enabling Analysis: | Enabling Analysis: | ||

* Ivaylo Dobrikov, Michael Leuschel. [https://www.sciencedirect.com/science/article/abs/pii/S0167642317301624?via%3Dihub Enabling Analysis for Event-B]. | * Ivaylo Dobrikov, Michael Leuschel. [https://www.sciencedirect.com/science/article/abs/pii/S0167642317301624?via%3Dihub Enabling Analysis for Event-B]. Sci. Comput. Program. 158: 81-99. 2018. | ||

Explicit test-case generation: | Explicit test-case generation: | ||

* Sebastian Wieczorek, Vitaly Kozyura, Andreas Roth, Michael Leuschel, Jens Bendisposto, Daniel Plagge, Ina Schieferdecker. [https://rdcu.be/b4UxY Applying Model Checking to Generate Model-Based Integration Tests from Choreography Models]. Proceedings FATES 2009, TestCom 2009. | * Sebastian Wieczorek, Vitaly Kozyura, Andreas Roth, Michael Leuschel, Jens Bendisposto, Daniel Plagge, Ina Schieferdecker. [https://rdcu.be/b4UxY Applying Model Checking to Generate Model-Based Integration Tests from Choreography Models]. Proceedings FATES 2009, TestCom 2009. | ||

Revision as of 09:31, 15 June 2020

Introduction

Testing aims at finding errors in a system or program. A set of tests is also called a test suite. Test case generation is the process of generating test suites for a particular system. Model-based Testing (MBT) is a technique to generate test suites for a system from a model describing the system. One usually tries to generate test suite which satisfies a given coverage criterion, e.g., ensuring that parts of the model are exhaustively exercised. Indeed, exhaustive testing of the implemented system is usually infeasible (because it would require infinitely many or way too many test cases), but one would like to increase the likelihood that the selected test suite uncovers errors in the implemented system.

In our case we have that

- a model is a formal model written in B, Event-B or even TLA+ and Z

- a test-case is a sequence of operations (or events) along with parameter values for the operations and concrete values for the constants and initial values of the formal model. One can also specify that a valid test case must end in a given class of states (specified by a predicate on the formal model's state).

- the coverage criterion is currently restricted to operation/event coverage, i.e., trying to ensure that every operation/event of the model is exercised by at least one test case.

ProB has two main algorithms to find suites of test cases:

- model-checking based (invoked, e.g., using the mcm_tests command of probcli) and

- constraint-based (invoked, e.g., using the cbc_tests command of probcli; more details can be found below)

The former will run the model checker to generate the state space of the formal model, and will stop when the coverage criterion has been satisfied. The full state space of the model contains all possible initialisations of the machine, along with all possible values of the constants. The latter, will use ProB's constraint solver to generate feasible sequences of events in a breadth-first fashion and will also stop when the coverage criterion has been satisfied. (You may want to examine the Tutorial_Model_Checking,_Proof_and_CBC in this context.) So what are the differences:

- the constraint-based approach will instantiate the constants and parameters of the operations as required by the target sequence. It will not examine every possible valuation for the constants and initial values of the machine, nor every possible value for the parameters of the operation.

- the constraint-based approach hence does not construct the full-state space (aka a graph), but rather constructs a tree of feasible execution paths. The constraint-based approach cannot detect cycles; even if the state space is finite it may run forever in case an operations cannot be covered (because it is infeasible).

When should one use the constraint-based test case generator:

- when one has a large number of possible values for the constants

- when one has a large number of possible values for the initial values

- when one has a large number of possible values for the parameters of the operations

- when the lengths of the individual test-cases remains relatively low; indeed, the complexity of the constraint solving increases with the length of the test-case and the number of candidate test cases also typically grow exponentially with the depth of the feasible execution paths.

Example 1 (Constraint-based Test Case Generation better)

Here is an example which illustrates when constraint-based test case generation is better.

MACHINE Wiki_Example1 CONSTANTS n PROPERTIES n:NATURAL1 VARIABLES x, y INVARIANT x: 0..n & y:0..n INITIALISATION x :: 1..n || y := 0 OPERATIONS Sety(yy) = PRE yy:1..n THEN y:=yy END; BothOverflow = SELECT x=y & y> 255 THEN x,y := 0,0 END END

The state space of this machine is infinite, as we have infinitely many possible values for n. For large values of n, we also have many possible initialisations for x and may possible parameter values for the Sety operation. This gives us an indication that the constraint-based test-case generation algorithm is better suited. Indeed, it will very quickly generate two test cases:

- SETUP_CONSTANTS(1) ; INITIALISATION(1,0) ; Sety(1)

- SETUP_CONSTANTS(256) ; INITIALISATION(256,0) ; Sety(256) ; BothOverflow

For the second test, the constraint solver was asked to find values for n, x, y, and the parameter yy so that the following sequence is feasible:

- SETUP_CONSTANTS(n) ; INITIALISATION(x,y) ; Sety(yy) ; BothOverflow

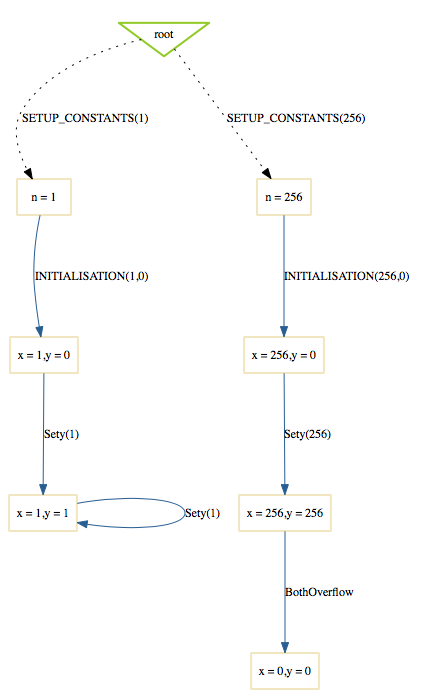

The first solution it found was n=256,x=256,y=0,yy=256. The whole test-case generation process takes less than a second. The generated tree can be visualised by ProB:

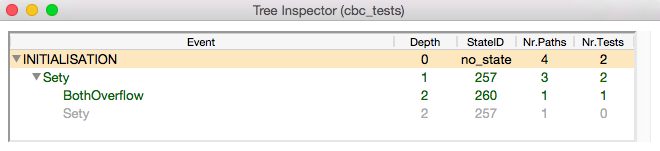

One can see that the only path of length 1 (not counting the INITIALISATION step) consists of the operation Set. The possible paths of length 2 are Set;BothOverflow and Set;Set. (The latter is grayed out as it does not provide a new test case.) Within ProB's state space the following states are generated by the test case generator. As one can see only the values n=1 and n=256 were generated, as driven by ProB's constraint solver:

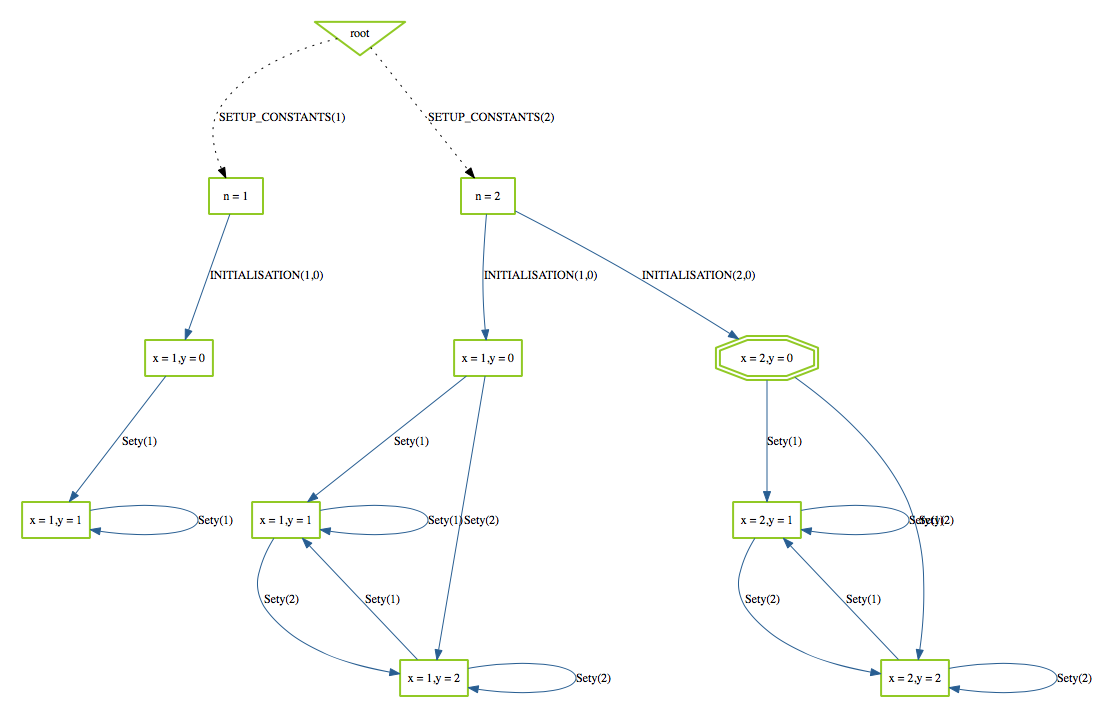

Finding a trace such that BothOverflow is enabled using the model checker will take much more time. Indeed, first one has to set MAXINT to at least 256 so that the value n=256 will eventually be generated. Then one has to set MAX_INITIALISATIONS also to at least 256 so that this value will actually be inspected by the model checker. Finally one has to set MAX_OPERATIONS also to at least 256 to generate yy=256; leading to a (truncated) state space of at least 16,777,216 states. Below is the state space just for the values n=1 and n=2 (which contains no state where BothOverflow is enabled):

Constraint-based Test Case Generation

Constraint based test case generation allows to explore the state space for a machine and generate traces of operations that cover certain user defined operations and meet a given condition to end the search. (See also the bounded model checker of ProB which uses this technique to find invariant violations.)

We will use the following B Machine as an example, which is stored in a file called simplecounter.mch.

MACHINE SimpleCounter

DEFINITIONS MAX==4

VARIABLES count

INVARIANT

count: 1..MAX

INITIALISATION count := 1

OPERATIONS

Increase(y) = PRE y: 1..(MAX-count) THEN

count := count+y

END;

Reset = BEGIN count := 1 END

END

How To run

To start the test case generation there are two alternatives. We can either provide all settings as command line arguments or in a test description file.

From the command line

From the command line there are six relevant settings to configure the test case generation

-cbc_tests Depth EndPredicate File

generate test cases by constraint solving with maximum

length Depth, the last state satisfies EndPredicate

and the test cases are written to File

-cbc_cover Operation

when generating CBC test cases, Operation should be covered. Each

operation to be covered needs to be specified separately.

-cbc_cover_match PartialOpName

just like -cbc_cover but for all operations whose name contains "PartialOpName"

-cbc_cover_final

specifies that the events specified above should only be used as final events in test-cases.

This option can lead to a considerable reduction in running time of the algorithm.

-p CLPFD TRUE

flag to enable the CLPFD constraint solver to search the state space, which is highly recommended.

-p TIME_OUT x

time out in milliseconds to abort the exploration of each possible trace

Example: To generate test cases that cover the event Increase in the machine above, leading to a state where count = MAX-1 is true and the explored paths do not have a depth of more than 5 operations we would specify this as follows, where we use CLPFD and have a timeout of 2000 ms. for each explored trace:

./probcli.sh simplecounter.mch -cbc_tests 5 count=MAX-1 test_results.xml -cbc_cover Increase -p CLPFD true -p TIME_OUT 2000

The generated test cases are stored in a file called test_results.xml. You can provide the empty filename

''

; in this case no file is generated.

Test Generation Description

The configuration for the test case generation can also be provided as an XML file. The format is shown below:

<test-generation-description>

<output-file>OUTPUT FILE NAME</output-file>

<event-coverage>

<event>EVENT 1</event>

<event>EVENT 2</event>

</event-coverage>

<target>TARGET PREDICATE</target>

<!-- the parameters section contains parameters that are very ProB-specific -->

<parameters>

<!-- the maximum depth is the maximum length of a trace of operations/event,

the algorithm might stop earlier when all events are covered -->

<maximum-depth>N</maximum-depth>

<!-- any ProB preference can be set that is listed when calling "probcli -help -v" -->

<!-- other probably interesting preferences are MININT, MAXINT and TIME_OUT -->

</parameters>

</test-generation-description>

Example: For our example the description file would look as follows.

<test-generation-description>

<output-file>test_results.xml</output-file>

<event-coverage>

<event>Increase</event>

</event-coverage>

<target>count = MAX - 1</target>

<parameters>

<maximum-depth>5</maximum-depth>

<!-- Please note: TIME_OUT (in milliseconds) is not a global time out, it is per trace -->

<preference name="CLPFD" value="true"/>

<!-- Please note: TIME_OUT (in milliseconds) is not a global time out, it is per trace -->

<preference name="TIME_OUT" value="2000"/>

</parameters>

</test-generation-description>

Assuming the test description above is stored in file named simple_counter_test_description.xml, we start the test case generation with the following call.

./probcli.sh simplecounter.mch -test_description simple_counter_test_description.xml

References

Constraint-based test-case generation using enabling analysis:

- Aymerick Savary, Marc Frappier, Michael Leuschel. Model-Based Robustness Testing in Event-B Using Mutation. Proceedings SEFM'2015.

Enabling Analysis:

- Ivaylo Dobrikov, Michael Leuschel. Enabling Analysis for Event-B. Sci. Comput. Program. 158: 81-99. 2018.

Explicit test-case generation:

- Sebastian Wieczorek, Vitaly Kozyura, Andreas Roth, Michael Leuschel, Jens Bendisposto, Daniel Plagge, Ina Schieferdecker. Applying Model Checking to Generate Model-Based Integration Tests from Choreography Models. Proceedings FATES 2009, TestCom 2009.